Can we prevent the Robo-Apocalypse?

By Joanna Bostock

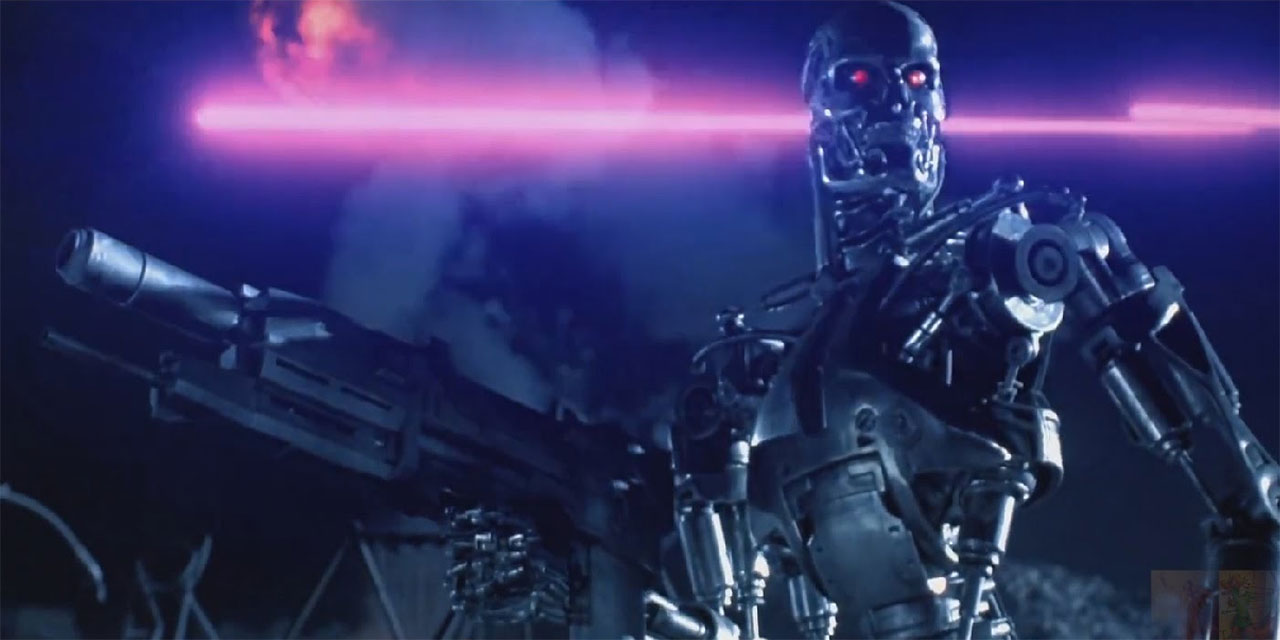

It’s tempting to reference (as many others have) the Terminator films when writing about the topic of lethal autonomous weapons systems (LAWS) because it’s an easy way of getting straight to the point of what we’re talking about – killer robots.

That’s the term chosen by the campaign to ban them, a coalition of NGOs coordinated by Human Rights Watch (HRW). The campaign started in 2013 in response to the use of autonomy and artificial intelligence in military applications, and what Mary Wareham, advocacy director of the Arms Division at HRW and coordinator of the Campaign to Stop Killer Robots, calls “significant investments into weaponising autonomy”.

“We saw enough evidence of these investments and of the military plans that accompanied them talking about full autonomy as an ultimate objective that we decided it was time for civil society to come together … to seek legislation to prevent the development of autonomous weapons that would lack human control in their critical functions, that is the identification and selection of targets and the use of force to fire against them, lethal or otherwise”, says Wareham.

What’s in a name?

Some would say the term “killer robots” is intended to stigmatise and doesn’t capture the complexity of the issue of autonomy, but Wareham says the term was deliberately chosen to make the campaign’s message more accessible: “after all, lethal means killer and an autonomous thing is a robot.”

“We’re aware that the term has been used in Hollywood and other artistic endeavours over the decades, but we’re applying it to a very real-world concern about autonomy in weapons systems and in the use of force and where we draw the normative line there. We have tried to give the public and governments a sense that this is a very dangerous future to go down if we do not regulate now.”

So what exactly are we talking about? To what extent do autonomous weapons already exist? Who has them and what are they for?

Guns, drones and missiles

Automatic weapons have existed for decades – just think of the machine gun, which accounted for 85% of soldiers’ deaths on the battlefields of World War I. In war or peace, militaries are engaged in a constant battle to stay ahead, or at least abreast, of (potential) adversaries, so it’s no surprise that they are taking advantage of increasingly sophisticated technology to become more efficient and more effective.

In his book “Army of None: Autonomous Weapons and the Future of War” Paul Scharre writes: “Militaries around the globe are racing to deploy robots at sea, on the ground and in the air – more than ninety countries have drones patrolling the skies. These robots are increasingly autonomous and many are armed. They operate under human control for now, but what happens when a Predator drone has as much autonomy as a Google car?”

Scharre is a fellow at the Center for a New American Security in Washington DC and a former US Army Ranger who helped develop some of the policy guidance at the Pentagon. In his book he explores the complexity of the term “autonomous” and different levels of human involvement: is a human IN the loop (making the decisions), ON the loop (supervising and able to intervene), or OUT of the loop altogether?

Scharre describes weapons systems that already exist or are being developed, like the Long Range Anti-Ship Missile (LRASM), which is fired by a human into a defined area, but autonomously identifies and attacks targets within that area and can detect and avoid attempts to shoot it down.

There are also projects exploring things like “swarm warfare” using small drones, and the US has an entire agency of scientists, the Defense Advanced Research Projects Agency (DARPA), “creating breakthrough technologies and capabilities for national security”.

These are close-to-home US examples, but it doesn’t stop there. South Korea’s robot border sentries and Russia’s robot tanks are among others mentioned, as is Israel and its Harpy drone, which has already “crossed the line to full autonomy” and “unlike the Predator drone, which is controlled by a human, can search a wide area for enemy radars and, once it finds one, destroy it without asking permission.”

A bleak future?

Mary Wareham states that “none of the existing and upcoming weapons systems would meet our criteria of a killer robot yet”. But, returning to our “Terminator” reference, could the grim, destructive visions of Hollywood and science fiction literature become a reality?

Paul Scharre reassures us that “even the most sophisticated machine intelligence today is a far cry from the sentient AIs depicted in science fiction.” He goes on to explore the thinking of some of the biggest names in the field on the subject of advanced AI, neural networks, deep learning and artificial general intelligence (AGI), “a hypothetical future AI that would exhibit human-level intelligence across the full range of cognitive tasks”.

Examining dystopian popular science fiction scenarios through the lens of experts’ theories, Scharre links our concerns about the development of AI to the “Frankenstein complex”, the fear that “hubris can lead to uncontrollable creations”. In other words, it’s normal to be afraid. Scharre concludes that he isn’t worried by the AI bad guys in science fiction, but does find one particular example of machine learning rather chilling:

The dystopian Hollywood scenario of SkyNet and Terminators is not a future the Campaign to Stop Killer Robots seeks to depict, but clearly the element of drama, tapping into people’s existing questions, if not fears, about AI, will help capture the attention of a wider public.

LAWS and the law

Fully autonomous weapons would, according to a report by the Campaign to Stop Killer Robots, “violate what is known as the Martens Clause, a long-standing provision of international humanitarian law which requires emerging technologies to be judged by the principles of humanity and the dictates of public conscience when they are not already covered by other treaty provisions”.

The paper was published ahead of a meeting of 70 governments this week at the United Nations in Geneva to discuss the challenges raised by lethal autonomous weapons systems.

Stop killer robots

Campaign to Stop Killer Robots action outside the UN

Scharre cites an example from his own tour of duty in Afghanistan: he and his sniper team were near the border with Pakistan, looking for routes where Taliban fighters might cross, when a girl aged five or six, herding goats, walked from the village towards them and then around them. They realised she was carrying a radio and reporting on their position to the Taliban. Taliban fighters appeared, there was a firefight, some Taliban were killed, the others ran away and the Americans were exfiltrated.

The girl had left the scene unharmed before the Taliban arrived. “The laws of war don’t set an age for combatants,” writes Scharre. “Behaviour determines whether or not a person is a combatant. What would a machine have done in our place? If it has been programmed to kill lawful enemy combatants, it would have attacked the little girl. Would a robot know when it is lawful to kill, but wrong?”

HRW points to existing international treaties, such as those banning land mines and cluster munitions, or chemical and biological weapons, as examples of successful bans. But the Campaign to Stop Killer Robots presents a very different case because there is no common understanding of what exactly autonomous weapons are.

Scharre identifies further challenges for the campaign: a lack of clarity about possible future scenarios; ambiguity in describing the issues, especially the vagueness of the term “meaningful human control”; the fact that it is led by NGOs and not states; its humanitarian approach, which ignores the strategic concerns of major military powers; and the fact that these powers are not among the handful of states which have expressed support for a ban.

Mary Wareham acknowledges there are challenges but says things are “starting to change” and that progress has been made in meetings up to now: “governments keep returning to this notion of human control. In May the United Nations Secretary-General, António Guterres, offered to support states to agree to new measures to ensure that human control over the use of force is retained going forward. That was a strong signal from the UN … and all the various expressions of support in recent years are evidence that the public is deeply uncomfortable with the notion of creating a killer robot and would like to see them regulated.”

Published on 31.08.2018